Self-Host AI-Powered Apps and Skills for Your Team: Simple, Secure & Local

- Agent-backed local install in minutes, or try it in the cloud

- Run open-source apps and your own AI skills with one click, or bring your own Dockerfile (BYOD)

- Run open source models locally or connect to your favorite LLM provider

- Optimized for affordable AMD Strix Halo minicomputers such as the Framework Desktop

- Securely access your services from anywhere with Tailscale, without exposing your node to the internet

Pricing

OnTree Grows With You

Start for free, or ask us to run TreeOS for you.

AI Enthusiast

Free

Self-host TreeOS and deploy OSS models on your own hardware. Install open-source projects with one click, or hack on your own features.

- All open-source features

- Bring your own infrastructure

- Bring your own Docker Compose file

- Best-effort support

Managed & Custom Installation

Let's talk

Managed updates, SLA-backed support, and custom integrations for teams.

- SLA-backed support

- Managed updates & security fixes

- Custom integrations

- Secure access from anywhere with Tailscale

- Onboarding & migration help

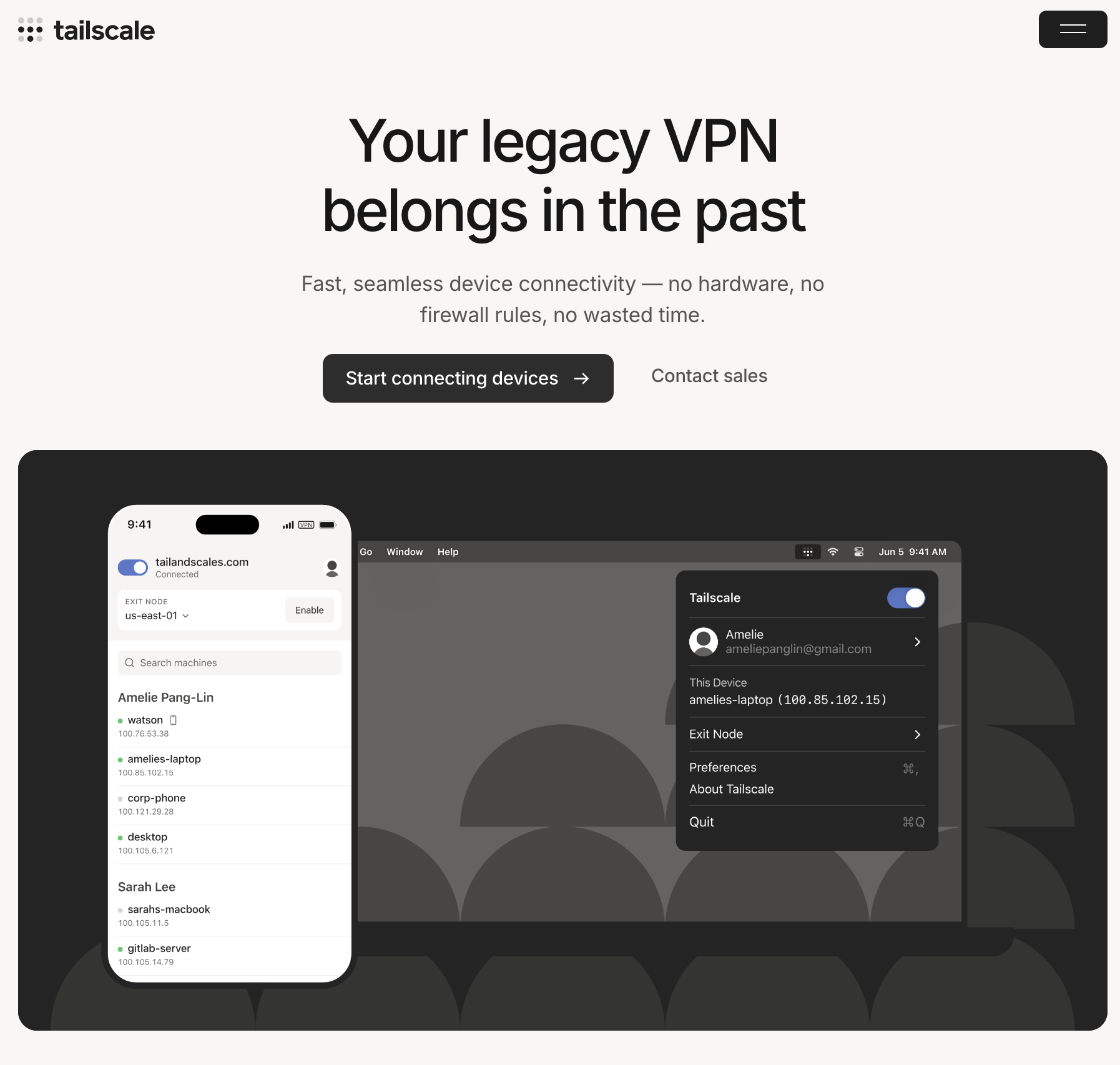

Deploy Apps With One Click

Launch production-ready applications from our curated template library. Whether you're deploying Open WebUI, LibreChat, Readeck, or custom Docker Compose stacks, TreeOS handles all the complexity for you.

Simply paste your compose file, configure environment variables, and hit deploy. TreeOS scaffolds your project, manages container lifecycles, and keeps everything in sync automatically, all controlled from a web interface.

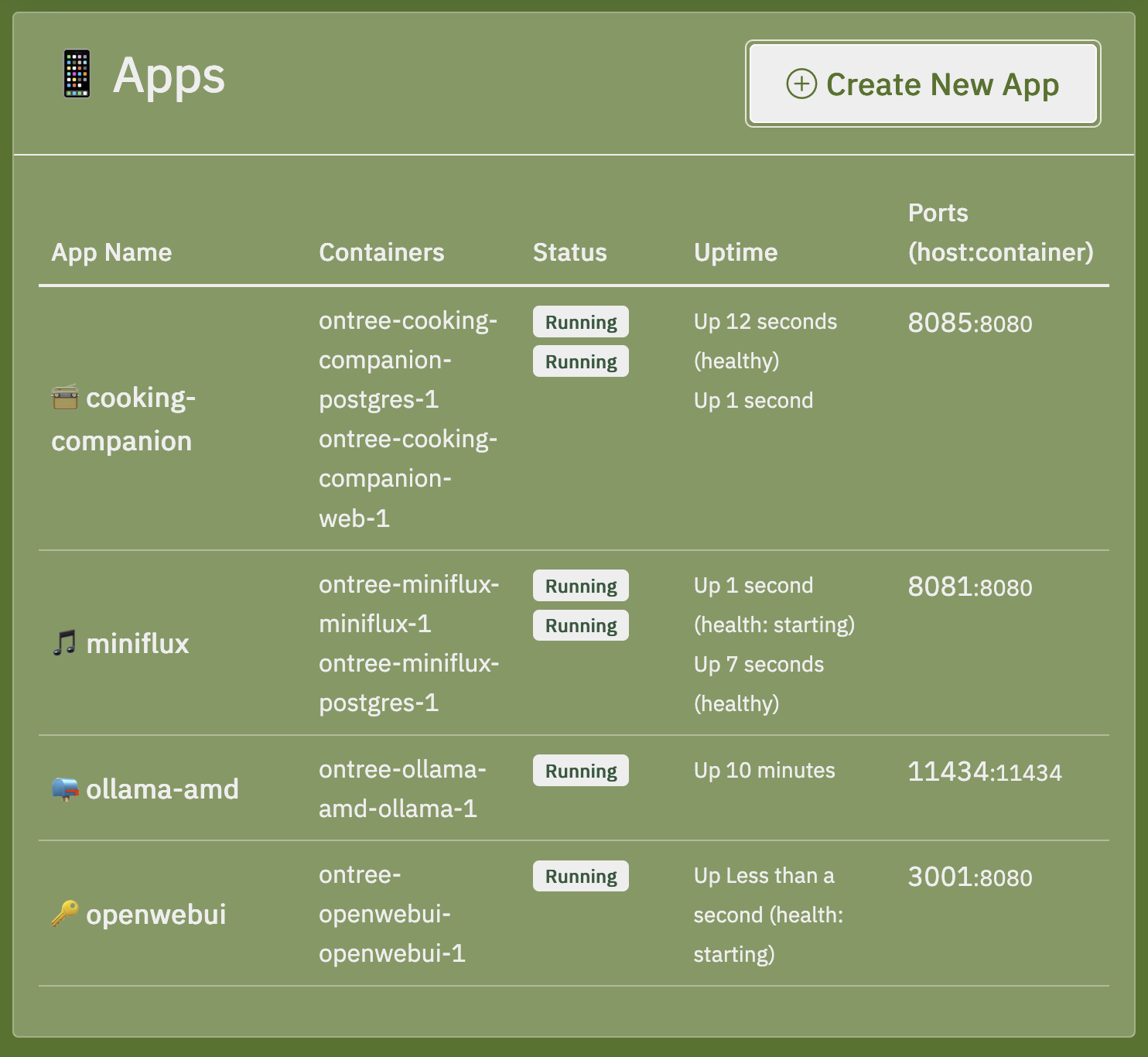

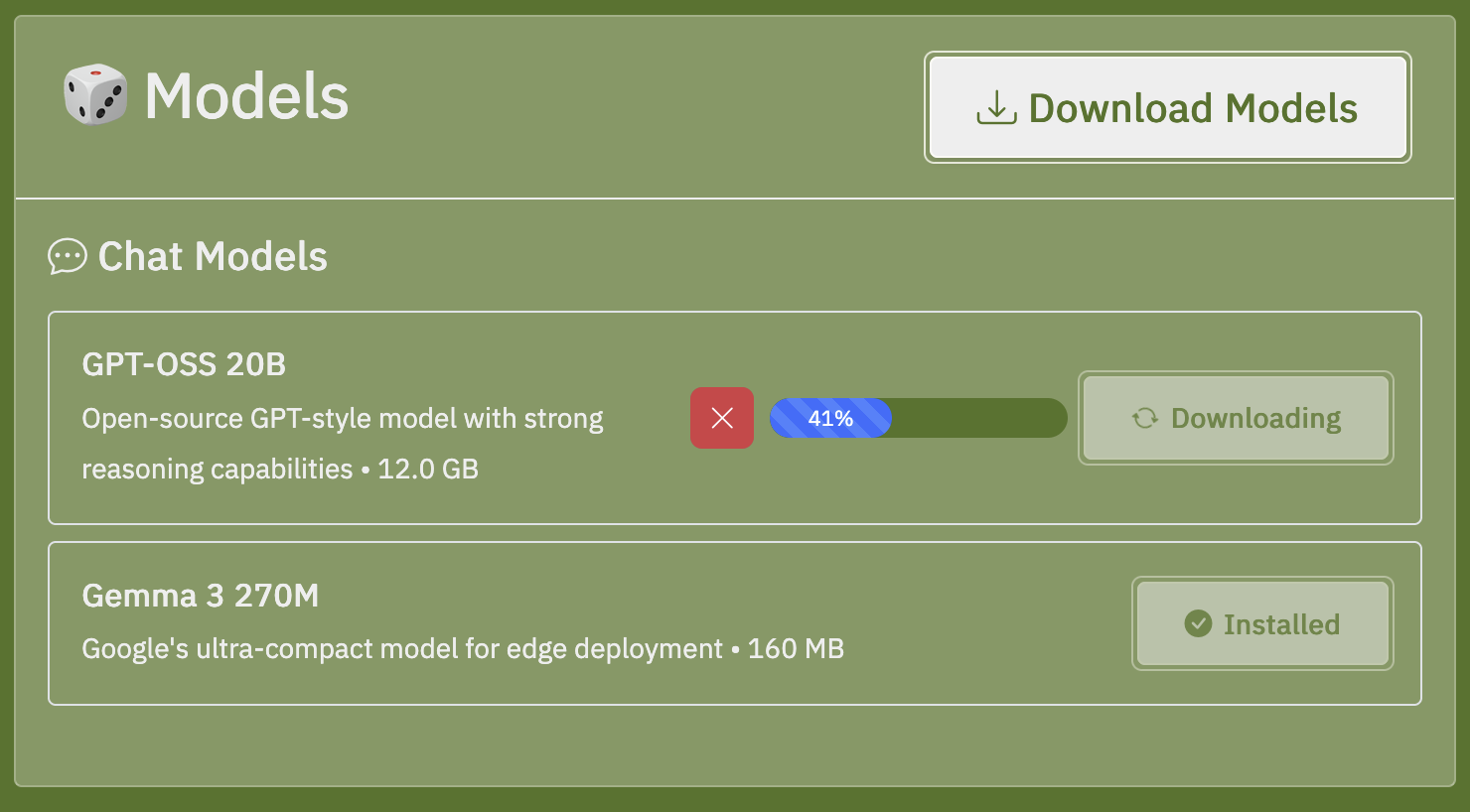

Run AI Models on Your Own Hardware

Run open-source AI models locally and keep your data private. Stop being tracked by dubious companies. TreeOS treats models as first-class citizens: browse our curated catalog of open-source models, then pull them directly to your hardware.

Manage model storage, integrate models with your applications, and run inference without sending your data to third parties. Your AI stays on your infrastructure, under your control, protecting both your privacy and your intellectual property.

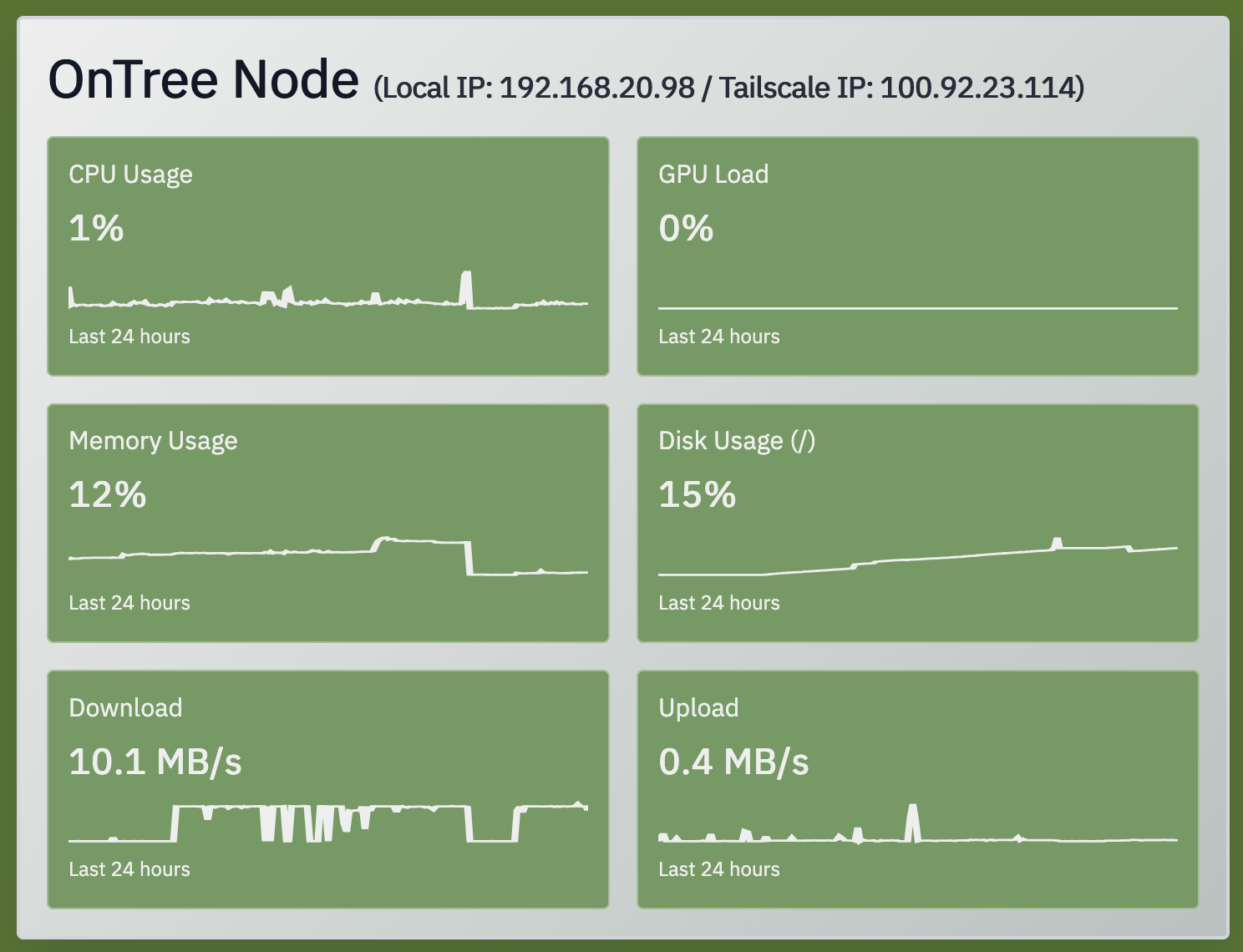

Manage Everything From Your Browser: No Command Line Required

Access a polished web dashboard that puts complete control at your fingertips. Edit Docker Compose files with syntax highlighting, stream container logs in real time, and monitor system vitals, all from your browser.

Track CPU, GPU, memory, disk, and network metrics with live graphs and historical sparklines. Start, stop, and restart services with one click. Everything you need to operate confidently, without touching the terminal.

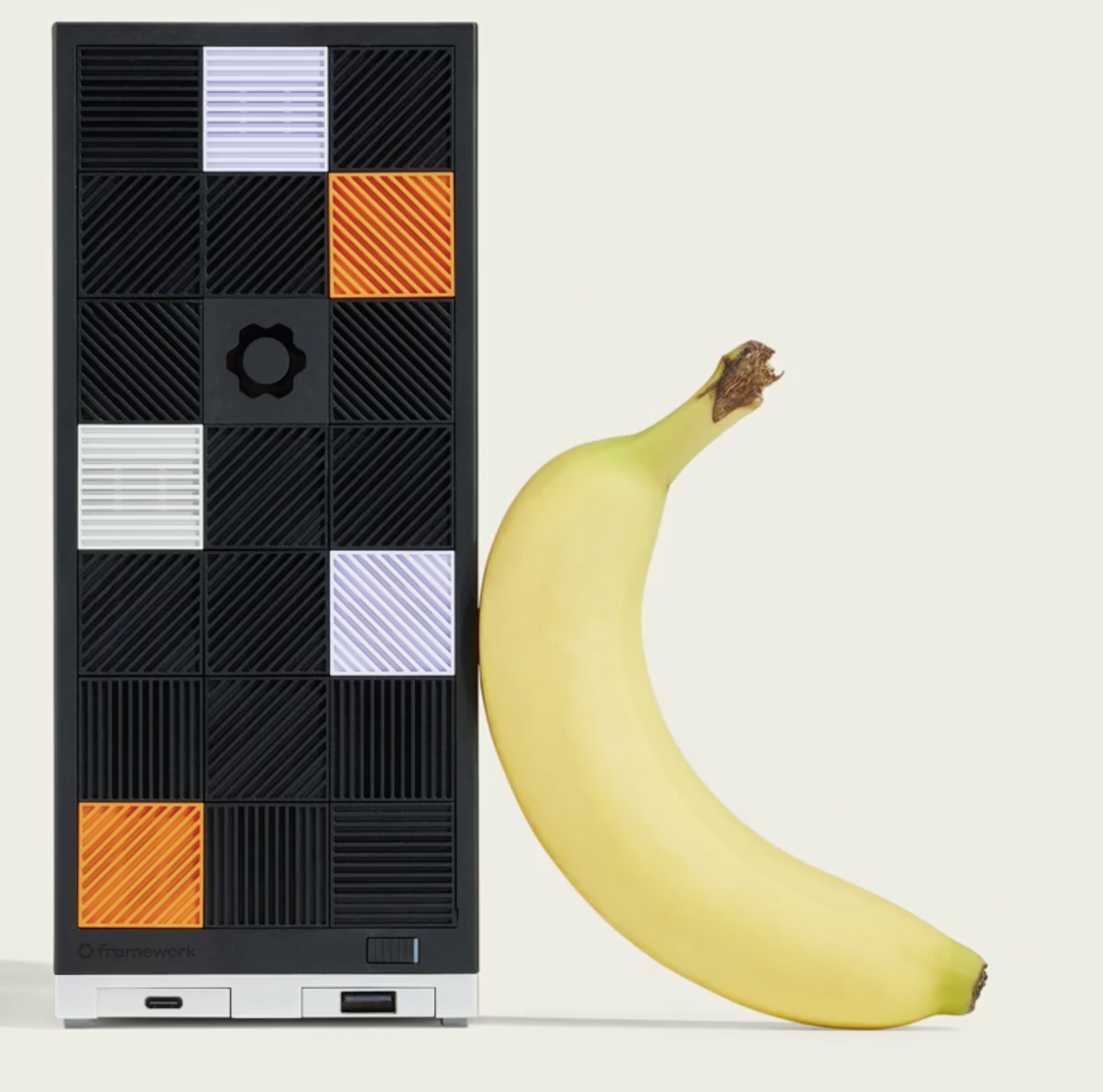

Optimized for the Framework Desktop and Similar Systems (AMD Ryzen AI Max+ 395)

TreeOS is optimized to leverage modern AMD APUs with up to 128 GB of unified RAM. Run local AI inference alongside your containerized applications on the same machine, without compromise.

The Framework Desktop and similar systems provide the perfect foundation: powerful processors, abundant memory, and upgradeable components. TreeOS makes full use of this hardware, letting you self-host both your apps and your AI models on a single machine.

Private Networking Baked In

Connect your TreeOS deployments securely with Tailscale so apps, models, and services are reachable from anywhere, without exposing ports or nodes to the internet and without managing VPN appliances.

Every node joins your tailnet with single sign-on, letting you tunnel traffic between home-lab hardware, cloud instances, and laptops while keeping it encrypted end to end. With Tailscale MagicDNS, your services get clean HTTPS domains without extra setup.